Proxmox VE Advanced: Clustering, High Availability & Disaster Recovery

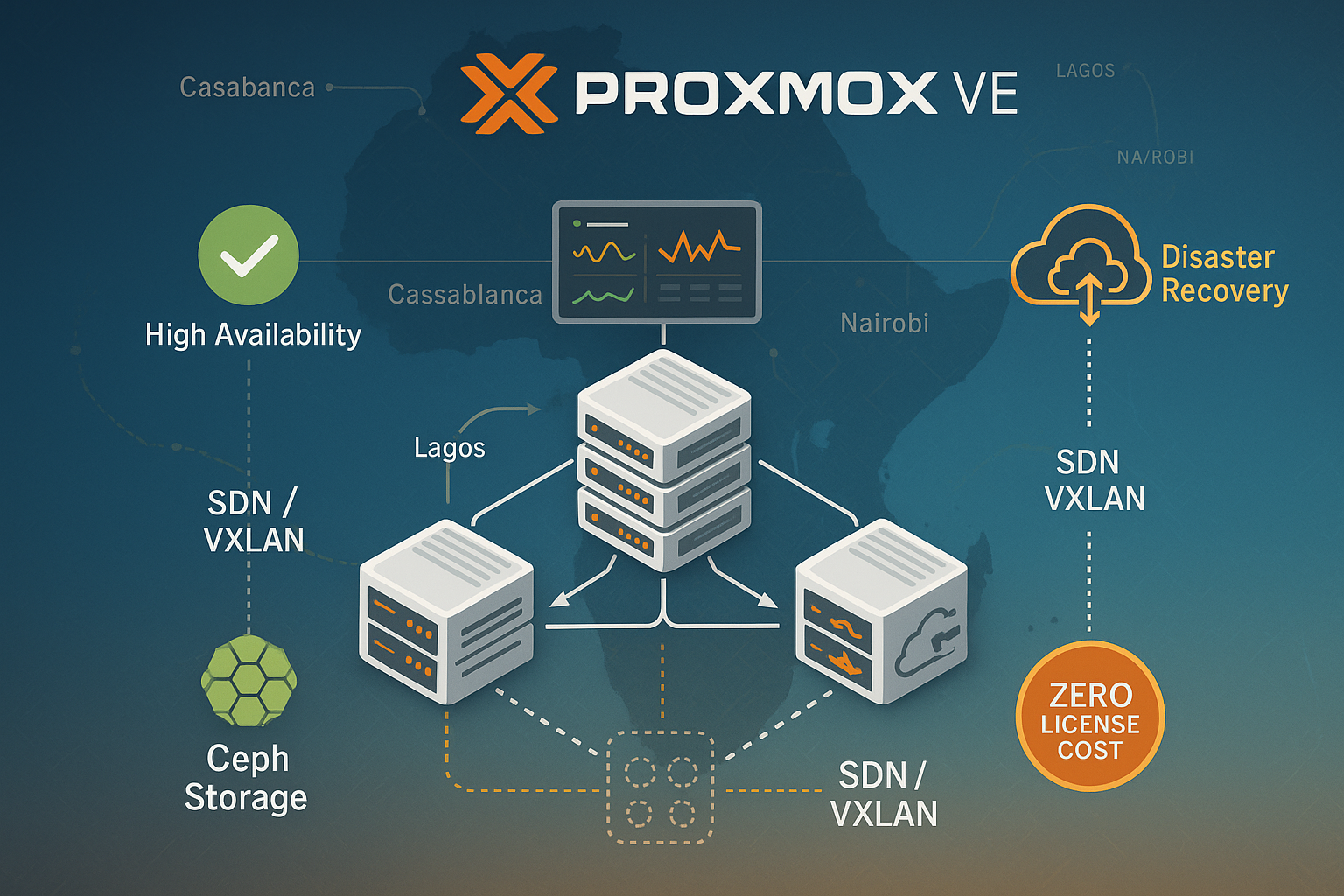

Master advanced Proxmox VE clustering with High Availability, Ceph hyper-converged storage, SDN with VLAN/VXLAN, and enterprise disaster recovery strategies. Hands-on 4-day training for production-grade deployments.

Training objectives

Upon completion of this training, you will be able to:

- Design and deploy production-grade Proxmox VE clusters with 3+ nodes

- Implement High Availability (HA) for automatic failover and zero-downtime operations

- Configure Ceph storage for hyper-converged infrastructure with RBD and CephFS

- Master Software-Defined Networking with VLAN, VXLAN, and EVPN configurations

- Build disaster recovery strategies with Proxmox Backup Server and live restore

- Automate cluster operations using REST API and command-line tools

- Troubleshoot complex scenarios including split-brain, storage failures, and network issues

- Optimize performance for production workloads and resource allocation

- Implement security best practices for clustered environments

- Plan capacity and scaling for growing infrastructure needs

Target audience

This training is designed for:

Senior System Administrators

Experienced professionals managing production virtualization environments who need to implement high availability and disaster recovery solutions

Infrastructure Architects

Responsible for designing resilient, scalable infrastructure solutions using open-source technologies as alternatives to VMware or Hyper-V

DevOps Engineers

Seeking to automate infrastructure deployment and management with API-driven approaches and Infrastructure as Code

Cloud Engineers

Building private or hybrid cloud solutions with enterprise-grade availability and performance requirements

IT Managers

Technical decision-makers evaluating Proxmox VE for mission-critical workloads and cost optimization strategies

MSP Professionals

Managed Service Providers implementing multi-tenant infrastructure with advanced networking and isolation requirements

This advanced training is particularly relevant for organizations in French-speaking Africa seeking sovereign, cost-effective alternatives to proprietary virtualization solutions.

Prerequisites

Technical Prerequisites

Required

- Proxmox VE Experience: Minimum 6 months managing Proxmox VE in production or completion of "Proxmox VE Fundamentals" training

- Linux Administration: Advanced command-line skills, systemd, networking, and storage management

- Networking Expertise: Deep understanding of VLANs, routing, switching, and TCP/IP stack

- Virtualization Knowledge: Experience with KVM, storage concepts, and resource management

- Basic Scripting: Bash scripting abilities for automation tasks

Recommended

- Experience with distributed storage (Ceph, GlusterFS, or similar)

- Understanding of BGP and EVPN concepts

- Familiarity with REST APIs and JSON

- Basic Python knowledge for advanced automation

- Experience with backup and disaster recovery planning

Lab Environment Requirements

Each participant needs access to a lab environment with:

- Minimum 3 physical servers or nested virtualization capability

- 64GB RAM total across all nodes (minimum 16GB per node)

- 500GB storage space for Ceph OSDs and VM storage

- Dedicated network for cluster communication (10Gbps recommended)

- Internet access for package updates and documentation

Detailed program

Detailed Training Program

Day 1: Advanced Clustering Architecture

Module 1: Proxmox VE Cluster Deep Dive (4h)

- Cluster architecture review and components

- Corosync cluster engine and communication

- Proxmox Cluster File System (pmxcfs) internals

- Quorum concepts and split-brain prevention

- Advanced cluster networking

- Redundant cluster communication links

- Network latency requirements and optimization

- Multicast vs Unicast configuration

- Cluster scalability and limits

- Node count considerations (tested up to 50 nodes)

- Performance implications of cluster size

- Geographic clustering possibilities

- Advanced cluster management

- Node addition and removal procedures

- Cluster recovery from various failure scenarios

- Backup and restore of cluster configuration

Build a 3-node cluster with redundant corosync links, simulate network failures, and practice cluster recovery procedures

Module 2: High Availability (HA) Implementation (3h)

- HA architecture and components

- HA Manager (ha-manager) internals

- Local Resource Manager (LRM) and Cluster Resource Manager (CRM)

- Fencing mechanisms and watchdog timers

- HA configuration and policies

- Resource states and state machines

- HA groups and migration priorities

- Custom HA policies and constraints

- Failure detection and recovery

- Node failure scenarios and automatic recovery

- Network partition handling

- Storage failure impact on HA

- HA best practices

- Hardware requirements for reliable HA

- Testing HA failover scenarios

- Maintenance mode and planned migrations

Configure HA for critical VMs, test various failure scenarios, implement custom HA policies

Day 2: Ceph Hyper-Converged Storage

Module 3: Ceph Storage Architecture (4h)

- Ceph fundamentals for Proxmox VE

- RADOS architecture and object storage

- Ceph monitors, managers, and OSDs

- CRUSH map and data placement

- Deploying Ceph on Proxmox VE

- Hardware requirements and recommendations

- Network design for Ceph (public/cluster networks)

- OSD deployment strategies (BlueStore)

- Ceph pools and performance tuning

- Pool creation and replication factors

- Erasure coding for space efficiency

- Performance optimization techniques

- QoS and bandwidth limitations

- Ceph RBD for VM storage

- RBD image features and snapshots

- Live migration with Ceph storage

- Thin provisioning and space reclamation

Deploy a 3-node Ceph cluster, create pools with different replication strategies, benchmark performance

Module 4: Advanced Ceph Features (3h)

- CephFS for shared storage

- MDS deployment and high availability

- CephFS volumes and subvolumes

- Access control and quotas

- Ceph maintenance and operations

- Adding and removing OSDs safely

- Upgrading Ceph while maintaining service

- Handling degraded states and recovery

- Monitoring and troubleshooting

- Ceph health monitoring and alerts

- Performance metrics and bottleneck identification

- Common issues and resolution strategies

- Disaster recovery with Ceph

- RBD mirroring for site replication

- Snapshot management strategies

- Recovery from catastrophic failures

Configure CephFS, simulate OSD failures, practice recovery procedures, implement monitoring

Day 3: Software-Defined Networking & Advanced Features

Module 5: SDN Implementation (4h)

- SDN architecture in Proxmox VE

- SDN zones: Simple, VLAN, QinQ, VXLAN, EVPN

- Controllers and transport networks

- VNets and subnet management

- VLAN and QinQ implementation

- VLAN-aware bridges and tagging

- QinQ for service provider scenarios

- Inter-VLAN routing strategies

- VXLAN overlay networks

- VXLAN concepts and encapsulation

- Multicast vs Unicast VXLAN

- MTU considerations and optimization

- Performance impact and hardware offloading

- EVPN-BGP advanced networking

- BGP configuration for EVPN

- Multi-site connectivity

- Anycast gateways and distributed routing

- Exit nodes and SNAT configuration

Implement multi-tenant isolation with VXLAN, configure EVPN for distributed networking, test cross-site connectivity

Module 6: Storage Replication & Migration (3h)

- ZFS replication framework

- Scheduled replication jobs

- Bandwidth limitations and scheduling

- Failover and failback procedures

- Cross-cluster migration strategies

- Online migration techniques

- Storage migration between different backends

- Minimizing downtime during migrations

- Backup integration

- vzdump advanced options

- Backup performance optimization

- Snapshot coordination with applications

Configure ZFS replication, perform live migrations between storage types, optimize backup windows

Day 4: Disaster Recovery & Automation

Module 7: Disaster Recovery Implementation (4h)

- Proxmox Backup Server integration

- PBS architecture and deduplication

- Incremental backup strategies

- Encryption and security considerations

- Disaster recovery planning

- RTO and RPO definitions

- Multi-site backup strategies

- Automated failover procedures

- Live restore capabilities

- Instant VM recovery from backup

- File-level recovery options

- Testing DR procedures without impact

- Cluster disaster recovery

- Full cluster backup strategies

- Recovering from total cluster loss

- Configuration backup and restore

- Ceph disaster recovery procedures

Deploy PBS, implement automated DR workflows, simulate disaster scenarios and recovery

Module 8: Automation and Monitoring (3h)

- REST API automation

- API authentication and tokens

- Common automation scenarios

- Python and pvesh scripting

- Ansible integration

- Proxmox Ansible modules

- Automated deployment workflows

- Configuration management

- Monitoring and alerting

- Metrics collection with InfluxDB

- Grafana dashboards for Proxmox

- Alert configuration and escalation

- Troubleshooting methodology

- Log analysis and correlation

- Performance bottleneck identification

- Common issues and solutions

Build an automated deployment pipeline, implement comprehensive monitoring, create runbooks for common scenarios

Certification and Assessment

- Practical assessment: Deploy and troubleshoot a complex multi-tier application

- Written exam covering all advanced topics

- ECINTELLIGENCE Advanced Clustering certificate upon successful completion

- Complete lab guides and automation scripts to take away

- 90-day access to cloud lab environment for practice

- Preparation guidance for Proxmox VE certified professional paths

Certification

At the end of this training, you will receive a certificate of participation issued by squint.

Other training courses that might interest you

Ready to develop your skills?

Join hundreds of professionals who have trusted squint for their skills.

View all our training courses